(Solved): Problem 2. (20 points) Consider the following Markov Decision Process (MDP) with discount factor ...

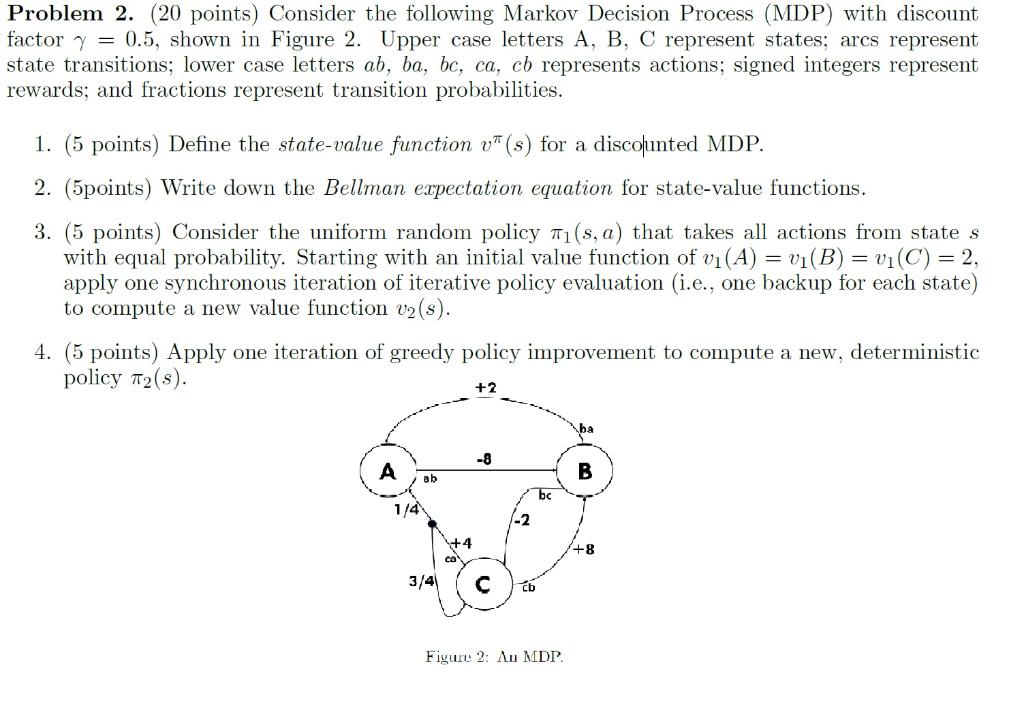

Problem 2. (20 points) Consider the following Markov Decision Process (MDP) with discount factor , shown in Figure 2. Upper-case letters A, B, C represent states; arcs represent state transitions; lower-case letters represent actions; signed integers represent rewards; and fractions represent transition probabilities.

1. (5 points) Define the state-value function for a discounted MDP.
2. (5 points) Write down the Bellman expectation equation for state-value functions.
3. (5 points) Consider the uniform random policy that takes all actions from state with equal probability. Starting with an initial value function of , apply one synchronous iteration of iterative policy evaluation (i.e., one backup for each state) to compute a new value function .
4. (5 points) Apply one iteration of greedy policy improvement to compute a new, deterministic policy.

Figure 2: ? MDP.
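Parts 3 and 4 describe two mechanical steps, which the following minimal Python sketch illustrates: one synchronous backup under the uniform random policy, followed by one greedy improvement step. Everything numeric here is an assumption for illustration only: the transition table `MDP`, the discount `GAMMA`, and the all-zero initial values are hypothetical placeholders and are not the values from Figure 2 or the problem statement. The greedy step is taken with respect to the updated values, which is also an assumption about what the question intends.

```python
# Hypothetical MDP: transitions, rewards, and discount are placeholders,
# NOT the values from Figure 2.
# MDP[state][action] = list of (probability, next_state, reward)
MDP = {
    "A": {"a": [(1.0, "B", -1)], "b": [(0.5, "B", 2), (0.5, "C", 0)]},
    "B": {"a": [(1.0, "C", 4)], "b": [(1.0, "A", 1)]},
    "C": {"a": [(1.0, "C", 0)]},
}
GAMMA = 0.5  # assumed discount factor


def q_value(mdp, v, state, action, gamma):
    """Expected one-step return of taking `action` in `state`, then following v."""
    return sum(p * (r + gamma * v[s2]) for p, s2, r in mdp[state][action])


def policy_evaluation_sweep(mdp, v, gamma):
    """One synchronous backup per state under the uniform random policy."""
    new_v = {}
    for s, actions in mdp.items():
        # Uniform random policy: every available action has equal weight.
        new_v[s] = sum(q_value(mdp, v, s, a, gamma) for a in actions) / len(actions)
    return new_v


def greedy_policy(mdp, v, gamma):
    """Deterministic policy choosing the action with the highest q-value in each state."""
    return {
        s: max(actions, key=lambda a: q_value(mdp, v, s, a, gamma))
        for s, actions in mdp.items()
    }


v1 = {s: 0.0 for s in MDP}                     # assumed initial value function
v2 = policy_evaluation_sweep(MDP, v1, GAMMA)   # part 3: one synchronous sweep
pi = greedy_policy(MDP, v2, GAMMA)             # part 4: greedy improvement
print(v2)
print(pi)
```

To apply the sketch to the actual problem, replace the entries of `MDP`, `GAMMA`, and `v1` with the transitions, discount factor, and initial values given in the figure and the problem statement. The sweep reads only from the old values `v` and writes to a separate dictionary, which is what makes the update synchronous.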