Home /

Expert Answers /

Statistics and Probability /

let-omega-mathfrak-f-mathrm-p-be-a-probability-space-and-let-mathbf-x-pa839

(Solved): Let \\( (\\Omega, \\mathfrak{F}, \\mathrm{P}) \\) be a probability space and let \\( \\mathbf{X}_{ ...

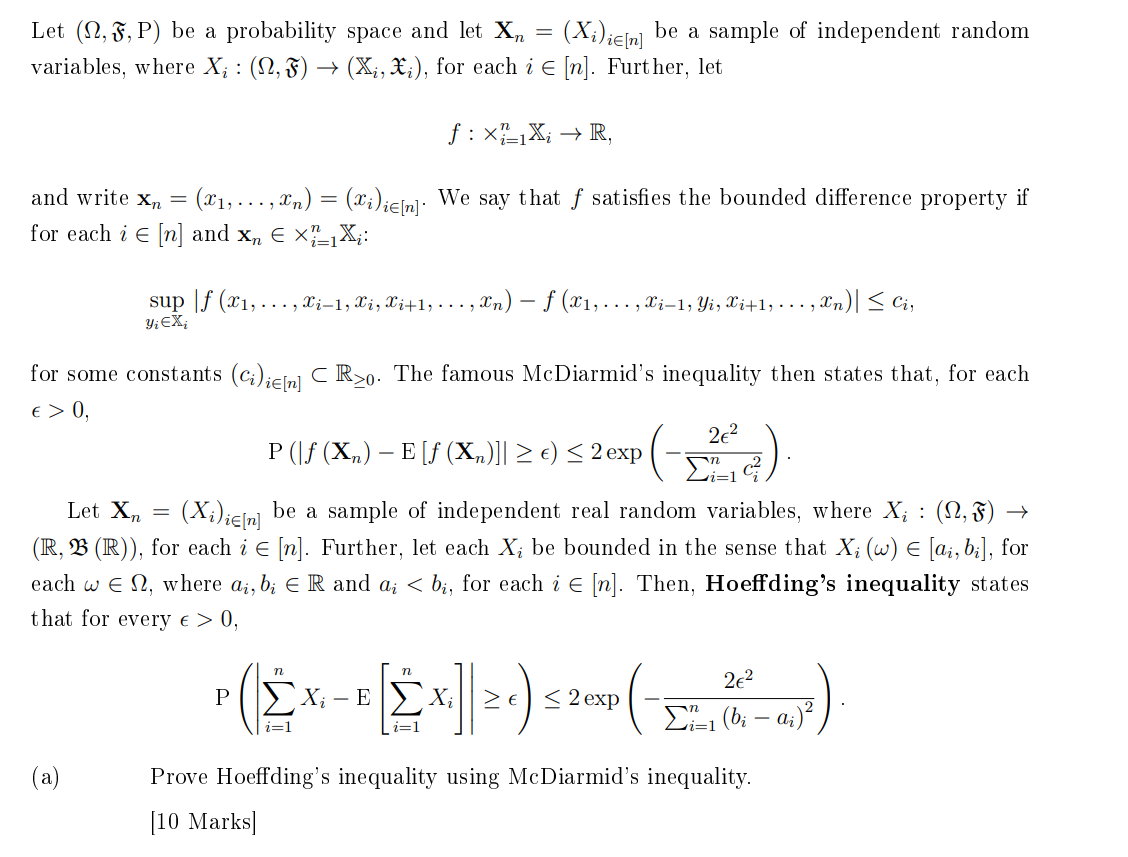

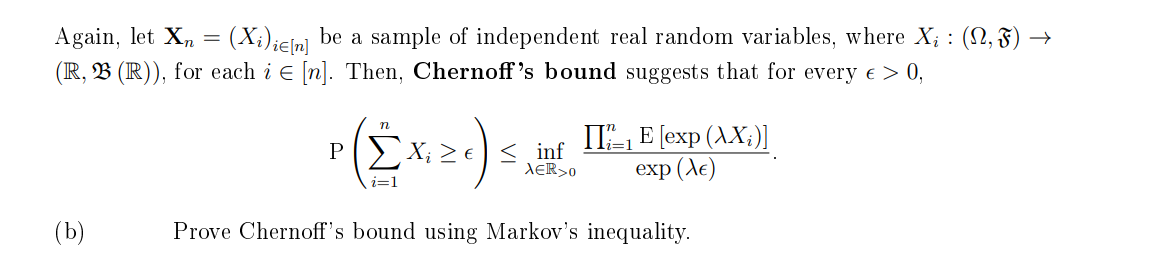

Let \\( (\\Omega, \\mathfrak{F}, \\mathrm{P}) \\) be a probability space and let \\( \\mathbf{X}_{n}=\\left(X_{i}\\right)_{i \\in[n]} \\) be a sample of independent random variables, where \\( X_{i}:(\\Omega, \\mathfrak{F}) \\rightarrow\\left(\\mathbb{X}_{i}, \\mathfrak{X}_{i}\\right) \\), for each \\( i \\in[n] \\). Further, let \\[ f: \\times_{i=1}^{n} \\mathbb{X}_{i} \\rightarrow \\mathbb{R} \\] and write \\( \\mathbf{x}_{n}=\\left(x_{1}, \\ldots, x_{n}\\right)=\\left(x_{i}\\right)_{i \\in[n]} \\). We say that \\( f \\) satisfies the bounded difference property if for each \\( i \\in[n] \\) and \\( \\mathbf{x}_{n} \\in \\times_{i=1}^{n} \\mathbb{X}_{i} \\) : \\[ \\sup _{y_{i} \\in \\mathbb{X}_{i}}\\left|f\\left(x_{1}, \\ldots, x_{i-1}, x_{i}, x_{i+1}, \\ldots, x_{n}\\right)-f\\left(x_{1}, \\ldots, x_{i-1}, y_{i}, x_{i+1}, \\ldots, x_{n}\\right)\\right| \\leq c_{i}, \\] for some constants \\( \\left(c_{i}\\right)_{i \\in[n]} \\subset \\mathbb{R}_{\\geq 0} \\). The famous McDiarmid's inequality then states that, for each \\( \\epsilon>0 \\) \\[ \\mathrm{P}\\left(\\left|f\\left(\\mathbf{X}_{n}\\right)-\\mathrm{E}\\left[f\\left(\\mathbf{X}_{n}\\right)\\right]\\right| \\geq \\epsilon\\right) \\leq 2 \\exp \\left(-\\frac{2 \\epsilon^{2}}{\\sum_{i=1}^{n} c_{i}^{2}}\\right) . \\] Let \\( \\mathbf{X}_{n}=\\left(X_{i}\\right)_{i \\in[n]} \\) be a sample of independent real random variables, where \\( X_{i}:(\\Omega, \\mathfrak{F}) \\rightarrow \\) \\( (\\mathbb{R}, \\mathfrak{B}(\\mathbb{R})) \\), for each \\( i \\in[n] \\). Further, let each \\( X_{i} \\) be bounded in the sense that \\( X_{i}(\\omega) \\in\\left[a_{i}, b_{i}\\right] \\), for each \\( \\omega \\in \\Omega \\), where \\( a_{i}, b_{i} \\in \\mathbb{R} \\) and \\( a_{i}0 \\), \\[ \\mathrm{P}\\left(\\left|\\sum_{i=1}^{n} X_{i}-\\mathrm{E}\\left[\\sum_{i=1}^{n} X_{i}\\right]\\right| \\geq \\epsilon\\right) \\leq 2 \\exp \\left(-\\frac{2 \\epsilon^{2}}{\\sum_{i=1}^{n}\\left(b_{i}-a_{i}\\right)^{2}}\\right) . \\] (a) Prove Hoeffding's inequality using McDiarmid's inequality. [10 Marks]\r\nAgain, let \\( \\mathbf{X}_{n}=\\left(X_{i}\\right)_{i \\in[n]} \\) be a sample of independent real random variables, where \\( X_{i}:(\\Omega, \\mathfrak{F}) \\rightarrow \\) \\( (\\mathbb{R}, \\mathfrak{B}(\\mathbb{R})) \\), for each \\( i \\in[n] \\). Then, Chernoff's bound suggests that for every \\( \\epsilon>0 \\), \\[ \\mathrm{P}\\left(\\sum_{i=1}^{n} X_{i} \\geq \\epsilon\\right) \\leq \\inf _{\\lambda \\in \\mathbb{R}_{>0}} \\frac{\\prod_{i=1}^{n} \\mathrm{E}\\left[\\exp \\left(\\lambda X_{i}\\right)\\right]}{\\exp (\\lambda \\epsilon)} . \\] (b) Prove Chernoff's bound using Markov's inequality.