

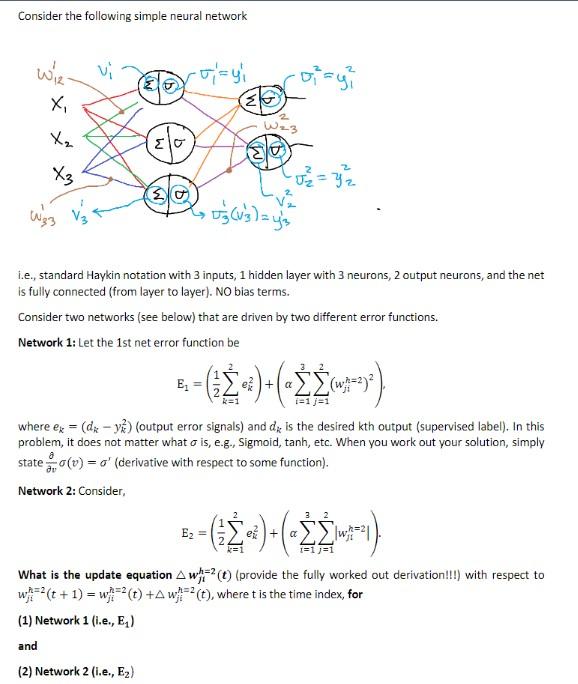

Consider the following simple neural network in standard Haykin notation: 3 inputs, 1 hidden layer with 3 neurons, and 2 output neurons, fully connected from layer to layer. There are NO bias terms. Consider two networks (see below) that are driven by two different error functions.

Network 1: Let the error function of the first network be

\[ \mathrm{E}_{1}=\left(\frac{1}{2} \sum_{k=1}^{2} e_{k}^{2}\right)+\left(\alpha \sum_{i=1}^{3} \sum_{j=1}^{2}\left(w_{j i}^{h=2}\right)^{2}\right) \]

where \( e_{k}=d_{k}-y_{k}^{h=2} \) are the output error signals and \( d_{k} \) is the desired \(k\)th output (supervised label). In this problem, it does not matter what \( \sigma \) is (e.g., sigmoid, tanh, etc.). When you work out your solution, simply write the derivative generically as \( \frac{\partial}{\partial v} \sigma(v)=\sigma'(v) \).

Network 2: Let the error function of the second network be

\[ \mathrm{E}_{2}=\left(\frac{1}{2} \sum_{k=1}^{2} e_{k}^{2}\right)+\left(\alpha \sum_{i=1}^{3} \sum_{j=1}^{2}\left|w_{j i}^{h=2}\right|\right) \]

What is the update equation \( \Delta w_{j i}^{h=2}(t) \) (provide the fully worked-out derivation!!!) with respect to \( w_{j i}^{h=2}(t+1)=w_{j i}^{h=2}(t)+\Delta w_{j i}^{h=2}(t) \), where \( t \) is the time index, for (1) Network 1 (i.e., \( \mathrm{E}_{1} \)) and (2) Network 2 (i.e., \( \mathrm{E}_{2} \))?

Expert Answer

The solution to the given question is as follows. First, we have to talk about neurons, the basic unit of a neural network. A neuron takes inputs, does some math
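A sketch of the derivation follows. It assumes plain gradient descent, \( \Delta w = -\eta\, \partial \mathrm{E} / \partial w \), with a learning rate \( \eta \) that the question does not specify. It writes the hidden-layer outputs as \( y_i^{h=1} \) and the output-layer induced local fields as \( v_j^{h=2} \), and it reads the penalty in \( \mathrm{E}_2 \) as \( |w_{ji}^{h=2}| \) summed over both \( i \) and \( j \). Only output neuron \( j \) depends on \( w_{ji}^{h=2} \), so the \( k \neq j \) error terms drop out of the derivative:

\[
\begin{aligned}
v_j^{h=2} &= \sum_{i=1}^{3} w_{ji}^{h=2}\, y_i^{h=1}, \qquad y_j^{h=2} = \sigma\!\left(v_j^{h=2}\right), \qquad e_j = d_j - y_j^{h=2}, \\
\frac{\partial}{\partial w_{ji}^{h=2}} \left( \frac{1}{2} \sum_{k=1}^{2} e_k^{2} \right)
&= e_j\, \frac{\partial e_j}{\partial y_j^{h=2}}\, \frac{\partial y_j^{h=2}}{\partial v_j^{h=2}}\, \frac{\partial v_j^{h=2}}{\partial w_{ji}^{h=2}}
= e_j\,(-1)\,\sigma'\!\left(v_j^{h=2}\right) y_i^{h=1}
= -\,\delta_j\, y_i^{h=1}, \qquad \delta_j = e_j\, \sigma'\!\left(v_j^{h=2}\right), \\
\frac{\partial}{\partial w_{ji}^{h=2}} \left( \alpha \sum_{i'=1}^{3} \sum_{j'=1}^{2} \left(w_{j'i'}^{h=2}\right)^{2} \right) &= 2\alpha\, w_{ji}^{h=2}, \qquad
\frac{\partial}{\partial w_{ji}^{h=2}} \left( \alpha \sum_{i'=1}^{3} \sum_{j'=1}^{2} \left|w_{j'i'}^{h=2}\right| \right) = \alpha\, \operatorname{sgn}\!\left(w_{ji}^{h=2}\right).
\end{aligned}
\]

Substituting into \( \Delta w_{ji}^{h=2}(t) = -\eta\, \partial \mathrm{E} / \partial w_{ji}^{h=2}(t) \) gives:

\[
\begin{aligned}
\text{(1) Network 1:}\quad \Delta w_{ji}^{h=2}(t) &= \eta\, \delta_j(t)\, y_i^{h=1}(t) - 2\eta\alpha\, w_{ji}^{h=2}(t), \\
\text{(2) Network 2:}\quad \Delta w_{ji}^{h=2}(t) &= \eta\, \delta_j(t)\, y_i^{h=1}(t) - \eta\alpha\, \operatorname{sgn}\!\left(w_{ji}^{h=2}(t)\right).
\end{aligned}
\]

Because \( |w| \) is not differentiable at \( w = 0 \), the \( \operatorname{sgn} \) term is a subgradient, with \( \operatorname{sgn}(0) = 0 \) taken here as a convention. The L2 term shrinks each weight in proportion to its size (weight decay). The L1 term instead subtracts a fixed step \( \eta\alpha \) toward zero regardless of the weight's size.

To sanity-check these two updates numerically, the sketch below implements them for a 3-3-2 network with no biases. It compares each update against \( -\eta \) times a central finite-difference gradient of \( \mathrm{E}_1 \) or \( \mathrm{E}_2 \). The logistic sigmoid, `eta`, `alpha`, the inputs, labels, and random weights are illustrative assumptions, and the helper names (`forward`, `loss`, `delta_w2`, `numeric_grad`) are hypothetical.

```python
import math
import random


def sigma(v):
    # Assumption: logistic sigmoid, chosen only for this check; the update
    # equations hold for any differentiable activation sigma.
    return 1.0 / (1.0 + math.exp(-v))


def sigma_prime(v):
    s = sigma(v)
    return s * (1.0 - s)


def sign(w):
    # sgn(w) with the subgradient convention sgn(0) = 0 at the kink of |w|
    return (w > 0) - (w < 0)


def forward(x, w1, w2):
    """3-3-2 fully connected net with no biases.

    w1[i][m]: weight from input m to hidden neuron i (layer h=1).
    w2[j][i]: weight from hidden neuron i to output neuron j (layer h=2).
    """
    y1 = [sigma(sum(w1[i][m] * x[m] for m in range(3))) for i in range(3)]
    v2 = [sum(w2[j][i] * y1[i] for i in range(3)) for j in range(2)]
    y2 = [sigma(v) for v in v2]
    return y1, v2, y2


def loss(x, d, w1, w2, alpha, penalty):
    """E_1 when penalty == "l2", E_2 when penalty == "l1"."""
    _, _, y2 = forward(x, w1, w2)
    data = 0.5 * sum((d[k] - y2[k]) ** 2 for k in range(2))
    if penalty == "l2":
        reg = alpha * sum(w2[j][i] ** 2 for j in range(2) for i in range(3))
    else:
        reg = alpha * sum(abs(w2[j][i]) for j in range(2) for i in range(3))
    return data + reg


def delta_w2(x, d, w1, w2, alpha, eta, penalty):
    """Analytic Delta w_ji^{h=2}(t); eta is the (assumed) learning rate."""
    y1, v2, y2 = forward(x, w1, w2)
    dw = [[0.0] * 3 for _ in range(2)]
    for j in range(2):
        local_grad = (d[j] - y2[j]) * sigma_prime(v2[j])  # delta_j = e_j * sigma'(v_j)
        for i in range(3):
            if penalty == "l2":
                reg_grad = 2.0 * alpha * w2[j][i]
            else:
                reg_grad = alpha * sign(w2[j][i])
            dw[j][i] = eta * local_grad * y1[i] - eta * reg_grad
    return dw


def numeric_grad(x, d, w1, w2, alpha, penalty, j, i, h=1e-6):
    """Central finite-difference estimate of dE/dw_ji^{h=2}."""
    w_plus = [row[:] for row in w2]
    w_minus = [row[:] for row in w2]
    w_plus[j][i] += h
    w_minus[j][i] -= h
    return (loss(x, d, w1, w_plus, alpha, penalty)
            - loss(x, d, w1, w_minus, alpha, penalty)) / (2 * h)


# Illustrative (made-up) inputs, labels, and weights
random.seed(0)
x = [random.uniform(-1, 1) for _ in range(3)]
d = [0.2, 0.9]
w1 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
w2 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(2)]
alpha, eta = 0.01, 0.5

for penalty in ("l2", "l1"):
    dw = delta_w2(x, d, w1, w2, alpha, eta, penalty)
    # Gradient descent: Delta w = -eta * dE/dw, so the two should agree closely.
    max_err = max(abs(dw[j][i] + eta * numeric_grad(x, d, w1, w2, alpha, penalty, j, i))
                  for j in range(2) for i in range(3))
    print(penalty, "max |analytic - numeric| =", max_err)
```

Only the output-layer weights \( w^{h=2} \) are updated here, matching the question. The hidden-layer weights `w1` are held fixed and serve only to produce \( y_i^{h=1} \).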